Thought you were rid of us? Not quite: in a last hurrah, Clément and I come back with a final pair of distribution estimation recaps — this time on results from the actual conference!

Gautam Kamath on Efficient Density Estimation via Piecewise Polynomial Approximation by Siu-On Chan, Ilias Diakonikolas, Rocco A. Servedio, and Xiaorui Sun

Density estimation is the question on everyone’s mind. It’s as simple as it gets – we receive samples from a distribution and want to figure out what the distribution looks like. The problem rears its head in almost every setting you can imagine — fields as diverse as medicine, advertising, and compiler design, to name a few. Given its ubiquity, it’s embarrassing to admit that we didn’t have a provably good algorithm for this problem until just now.

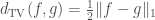

Let’s get more precise. We’ll deal with the total variation distance metric (AKA statistical distance). Given distributions with PDFs  and

and  , their total variation distance is

, their total variation distance is  . Less formally but more intuitively, it upper bounds the difference in probabilities for any given event. With this metric in place, we can define what it means to learn a distribution: given sample access to a distribution

. Less formally but more intuitively, it upper bounds the difference in probabilities for any given event. With this metric in place, we can define what it means to learn a distribution: given sample access to a distribution  , we would like to output a distribution

, we would like to output a distribution  such that

such that  .

.

This paper presents an algorithm for learning  -piecewise degree-

-piecewise degree- polynomials. Wow, that’s a mouthful — what does it mean? A

polynomials. Wow, that’s a mouthful — what does it mean? A  -piecewise degree-

-piecewise degree- polynomial is a function where the domain can be partitioned into

polynomial is a function where the domain can be partitioned into  intervals, such that the function is a degree-

intervals, such that the function is a degree- polynomial on each of these intervals. The main result says that a distribution with a PDF described by a

polynomial on each of these intervals. The main result says that a distribution with a PDF described by a  -piecewise degree-

-piecewise degree- polynomial can be learned to accuracy

polynomial can be learned to accuracy  using

using  samples and polynomial time. Moreover, the sample complexity is optimal up to logarithmic factors.

samples and polynomial time. Moreover, the sample complexity is optimal up to logarithmic factors.

Now this is great and all, but what good are piecewise polynomials? How many realistic distributions are described by something like “ for

for  but

but  for

for  and

and  …”? The answer turns out to be a ton of distributions — as long as you squint at them hard enough.

…”? The answer turns out to be a ton of distributions — as long as you squint at them hard enough.

The wonderful thing about this result is that it’s semi-agnostic. Many algorithms in the literature are God-fearing subroutines, and will sacrifice their first-born child to make sure they receive samples from the class of distributions they’re promised — otherwise, you can’t make any guarantees about the quality of their output. But our friend here is a bit more skeptical. He deals with a funny class of distributions, and knows true piecewise polynomial distributions are few and far between — if you get one on the streets, who knows if it’s pure? Our friend is resourceful: no matter the quality, he makes it work.

Let’s elaborate, in slightly less blasphemous terms. Suppose you’re given sample access to a distribution  which is at total variation distance

which is at total variation distance  from some

from some  -piecewise degree-

-piecewise degree- polynomial (you don’t need to know which one). Then the algorithm will output a

polynomial (you don’t need to know which one). Then the algorithm will output a  -piecewise degree-

-piecewise degree- polynomial which is at distance

polynomial which is at distance  from

from  . In English: even if the algorithm isn’t given a piecewise polynomial, it’ll still produce something that’s (almost) as good as you could hope for.

. In English: even if the algorithm isn’t given a piecewise polynomial, it’ll still produce something that’s (almost) as good as you could hope for.

With this insight under our cap, let’s ask again — where do we see piecewise polynomials? They’re everywhere: this algorithm can handle distributions which are log-concave, bounded monotone, Gaussian,  -modal, monotone hazard rate, and Poisson Binomial. And the kicker is that it can handle mixtures of these distributions too. Usually, algorithms fail catastrophically when considering mixtures, but this algorithm keeps chugging and handles them all — and near optimally, most of the time.

-modal, monotone hazard rate, and Poisson Binomial. And the kicker is that it can handle mixtures of these distributions too. Usually, algorithms fail catastrophically when considering mixtures, but this algorithm keeps chugging and handles them all — and near optimally, most of the time.

The analysis is tricky, but I’ll try to give a taste of some of the techniques. One of the key tools is the Vapnik-Chervonenkis (VC) inequality. Without getting into the details, the punchline is that if we output a piecewise polynomial which is “close” to the empirical distribution (under a weaker metric than total variation distance), it’ll give us our desired learning result. In this setting, “close” means (roughly) that the CDFs don’t stray too far from each (though in a sense that is stronger than the Kolmogorov distance metric).

Let’s start with an easy case – what if the distribution is a  -piecewise polynomial? By the VC inequality, we just have to match the empirical CDF. We can do this by setting up a linear program which outputs a linear combination of the Chebyshev polynomials, constrained to resemble the empirical distribution.

-piecewise polynomial? By the VC inequality, we just have to match the empirical CDF. We can do this by setting up a linear program which outputs a linear combination of the Chebyshev polynomials, constrained to resemble the empirical distribution.

It turns out that this subroutine is the hardest part of the algorithm. In order to deal with multiple pieces, we first discretize the support into small intervals which are roughly equal in probability mass. Next, in order to discover a good partition of these intervals, we run a dynamic program. This program uses the subroutine from the previous paragraph to compute the best polynomial approximation over each contiguous set of the intervals. Then, it stitches the solutions together in the minimum cost way, with the constraint that it uses fewer than  pieces.

pieces.

In short, this result essentially closes the problem of density estimation for an enormous class of distributions — they turn existential approximations (by piecewise polynomials) into approximation algorithms. But there’s still a lot more work to do — while this result gives us improper learning, we yearn for proper learning algorithms. For example, this algorithm lets us approximate a mixture of Gaussians using a piecewise polynomial, but can we output a mixture of Gaussians as our hypothesis instead? Looking at the sample complexity, the answer is yes, but we don’t know of any computationally efficient way to solve this problem yet. Regardless, there’s many exciting directions to go — I’m looking forward to where the authors will take this line of work!

-G

Clément Canonne on  -Testing, by Piotr Berman, Sofya Raskhodnikova, and Grigory Yaroslavtsev [1])

-Testing, by Piotr Berman, Sofya Raskhodnikova, and Grigory Yaroslavtsev [1])

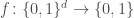

Almost every — if not all — work in property testing of functions are concerned with the Hamming distance between functions, that is the fraction of inputs on which they disagree. Very natural when we deal for instance with Boolean functions  , this distance becomes highly arguable when the codomain is, say, the real line: sure,

, this distance becomes highly arguable when the codomain is, say, the real line: sure,  and

and  technically disagree on almost every single input, but should they be considered two completely different functions?

technically disagree on almost every single input, but should they be considered two completely different functions?

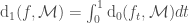

This question, Grigory answered by the negative; and presented (joint work with Piotr Berman and Sofya Raskhodnikova [2]) a new framework for testing real-valued functions ![f\colon X^d\to[0,1]](https://s0.wp.com/latex.php?latex=f%5Ccolon+X%5Ed%5Cto%5B0%2C1%5D&bg=ffffff&fg=404040&s=0&c=20201002) , less sensitive to this sort of annoying “technicalities” (i.e., noise). Instead of the usual Hamming/

, less sensitive to this sort of annoying “technicalities” (i.e., noise). Instead of the usual Hamming/ distance between function, they suggest the more robust

distance between function, they suggest the more robust  (

( ) distance

) distance

![\mathrm{d}_p(f,g) = \frac{ \left(\int_{X^d} \lvert f(x)-g(x) \rvert^p dx\right)^{\frac{1}{p}} }{ \left(\int_{X^d} \mathbf{1} dx \right)^{\frac{1}{p}} } = \mathbb{E}_{x\sim\mathcal{U}(X^d)}\left[\lvert f(x)-g(x) \rvert^p\right]^{\frac{1}{p}}\in [0,1]](https://s0.wp.com/latex.php?latex=%5Cmathrm%7Bd%7D_p%28f%2Cg%29+%3D+%5Cfrac%7B+%5Cleft%28%5Cint_%7BX%5Ed%7D+%5Clvert+f%28x%29-g%28x%29+%5Crvert%5Ep+dx%5Cright%29%5E%7B%5Cfrac%7B1%7D%7Bp%7D%7D+%7D%7B+%5Cleft%28%5Cint_%7BX%5Ed%7D+%5Cmathbf%7B1%7D+dx+%5Cright%29%5E%7B%5Cfrac%7B1%7D%7Bp%7D%7D+%7D+%3D+%5Cmathbb%7BE%7D_%7Bx%5Csim%5Cmathcal%7BU%7D%28X%5Ed%29%7D%5Cleft%5B%5Clvert+f%28x%29-g%28x%29+%5Crvert%5Ep%5Cright%5D%5E%7B%5Cfrac%7B1%7D%7Bp%7D%7D%5Cin+%5B0%2C1%5D&bg=ffffff&fg=404040&s=0&c=20201002)

(think of  as being the hypercube

as being the hypercube  or the hypergrid

or the hypergrid ![[n]^d](https://s0.wp.com/latex.php?latex=%5Bn%5D%5Ed&bg=ffffff&fg=404040&s=0&c=20201002) , and

, and  being 1 or 2. In this case, the denominator is just a normalizing factor

being 1 or 2. In this case, the denominator is just a normalizing factor  or

or  )

)

Now, erm… why?

- because it is much more robust to noise in the data;

- because it is much more robust to outliers;

- because it plays well (as a preprocessing step for model selection) with existing variants of PAC-learning under

norms;

norms;

- because

and

and  are pervasive in (machine) learning;

are pervasive in (machine) learning;

- because they can.

Their results and methods turn out to be very elegant: to outline only a few, they

- give the first example of testing monotonicity testing (de facto, for the

distance) when adaptivity provably helps; that is, a testing algorithm that selects its future queries as a function of the answers it previously got can outperform any tester that commits in advance to all its queries. This settles a longstanding question for testing monotonicity with respect to Hamming distance;

distance) when adaptivity provably helps; that is, a testing algorithm that selects its future queries as a function of the answers it previously got can outperform any tester that commits in advance to all its queries. This settles a longstanding question for testing monotonicity with respect to Hamming distance;

- improve several general results for property testing, also applicable to Hamming testing (e.g. Levin’s investment strategy [3]);

- provide general relations between sample complexity of testing (and tolerant testing) for various norms (

);

);

- have quite nice and beautiful algorithms (e.g., testing via partial learning) for testing monotonicity and Lipschitz property;

- give close-to-tight bounds for the problems they consider;

- have slides in which the phrase “Big Data” and a mention to stock markets appear (!);

- have an incredibly neat reduction between

and Hamming testing of monotonicity.

and Hamming testing of monotonicity.

I will hereafter only focus on the last of these bullets, one which really tickled my fancy (gosh, my fancy is so ticklish) — for the other ones, I urge you to read the paper. It is a cool paper. Really.

Here is the last bullet, in a slightly more formal fashion — recall that a function  defined on a partially ordered set is monotone if for all comparable inputs

defined on a partially ordered set is monotone if for all comparable inputs  such that

such that  , one has

, one has  ; and that a one-sided tester is an algorithm which will never reject a “good” function: it can only err on “bad” functions (that is, it may sometimes accept, with small probability, a function far from monotone, but will never reject a monotone function).

; and that a one-sided tester is an algorithm which will never reject a “good” function: it can only err on “bad” functions (that is, it may sometimes accept, with small probability, a function far from monotone, but will never reject a monotone function).

Theorem.

Suppose one has a one-sided, non-adaptive tester  for monotonicity of Boolean functions

for monotonicity of Boolean functions  with respect to Hamming distance, with query complexity

with respect to Hamming distance, with query complexity  . Then the very same

. Then the very same  is also a tester for monotonicity of real-valued functions

is also a tester for monotonicity of real-valued functions ![f\colon X\to [0,1]](https://s0.wp.com/latex.php?latex=f%5Ccolon+X%5Cto+%5B0%2C1%5D&bg=ffffff&fg=404040&s=0&c=20201002) with respect to

with respect to  distance.

distance.

Almost too good to be true: we can recycle testers! How? The idea is to express our real-valued  as some “mixture” of Boolean functions, and use

as some “mixture” of Boolean functions, and use  as if we were accessing these. More precisely, let

as if we were accessing these. More precisely, let ![f\colon [n]^d \to [0,1]](https://s0.wp.com/latex.php?latex=f%5Ccolon+%5Bn%5D%5Ed+%5Cto+%5B0%2C1%5D&bg=ffffff&fg=404040&s=0&c=20201002) be a function which one intends to test for monotonicity. For all thresholds

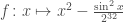

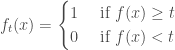

be a function which one intends to test for monotonicity. For all thresholds ![t\in[0,1]](https://s0.wp.com/latex.php?latex=t%5Cin%5B0%2C1%5D&bg=ffffff&fg=404040&s=0&c=20201002) , the authors define the Boolean function

, the authors define the Boolean function  by

by

All these  are Boolean; and one can verify that for all

are Boolean; and one can verify that for all  ,

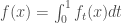

,  . Here comes the twist: one can also show that the distance of

. Here comes the twist: one can also show that the distance of  to monotone satisfies

to monotone satisfies

i.e. the  distance of

distance of  to monotone is the integral of the Hamming distances of the

to monotone is the integral of the Hamming distances of the  ‘s to monotone. And by a very simple averaging argument, if

‘s to monotone. And by a very simple averaging argument, if  is far from monotone, then at least one of the

is far from monotone, then at least one of the  ‘s must be…

‘s must be…

How does that help? Well, take your favorite Boolean, Hamming one-sided (non-adaptive) tester for monotonicity,  : being one-sided, it can only reject a function if it has some “evidence” it is not monotone — indeed, if it sees some violation: i.e., a pair

: being one-sided, it can only reject a function if it has some “evidence” it is not monotone — indeed, if it sees some violation: i.e., a pair  with

with  but

but  .

.

Feed this tester, instead of the Boolean function it expected, our real-valued  ; as one of the

; as one of the  ‘s is far from monotone, our tester would reject

‘s is far from monotone, our tester would reject  ; so it would find a violation of monotonicity by

; so it would find a violation of monotonicity by  if it were given access to

if it were given access to  . But being non-adaptive, the tester does exactly the same queries on

. But being non-adaptive, the tester does exactly the same queries on  as it would have done on this

as it would have done on this  ! And it is not difficult to see that a violation for

! And it is not difficult to see that a violation for  is still a violation for

is still a violation for  : so the tester finds a proof that

: so the tester finds a proof that  is not monotone, and rejects.

is not monotone, and rejects.

Wow.

— Clément.

Final, small remark: one may notice a similarity between  testing of functions

testing of functions ![f\colon[n]\to[0,1]](https://s0.wp.com/latex.php?latex=f%5Ccolon%5Bn%5D%5Cto%5B0%2C1%5D&bg=ffffff&fg=404040&s=0&c=20201002) and the “usual” testing (with relation to total variation distance,

and the “usual” testing (with relation to total variation distance,  ) of distributions

) of distributions ![D\colon [n]\to[0,1]](https://s0.wp.com/latex.php?latex=D%5Ccolon+%5Bn%5D%5Cto%5B0%2C1%5D&bg=ffffff&fg=404040&s=0&c=20201002) . There is actually a quite important difference, as in the latter the distance is not normalized by

. There is actually a quite important difference, as in the latter the distance is not normalized by  (because distributions have to sum to

(because distributions have to sum to  anyway). In this sense, there is no direct relation between the two, and the work presented here is indeed novel in every respect.

anyway). In this sense, there is no direct relation between the two, and the work presented here is indeed novel in every respect.

Edit: thanks to Sofya Raskhodnikova for spotting an imprecision in the original review.

[1] Slides available here: http://grigory.github.io/files/talks/BRY-STOC14.pptm

[2] http://dl.acm.org/citation.cfm?id=2591887

[3] See e.g. Appendix A.2 in “On Multiple Input Problems in Property Testing”, Oded Goldreich. 2013. http://eccc-preview.hpi-web.de/report/2013/067/

vertices and

directed edges. For a vertex

, let us denote

the set of vertices that are reachable from

. There are two known ways to compute sets

(all at the same time):

, which is the best known bound for sparse graphs;

. This algorithm is better for dense graphs.

. Namely, the following theorem was proved back in 1994:

-multiplicative approximation for every

with running time

.

be a function that assigns random independent and uniform reals between 0 and 1 to every vertex. Let us define

. Show how to compute values of

for all vertices

at once in time

.

, denote

the distribution of the minimum of

independent and uniform reals between 0 and 1. Suppose we receive several independent samples from

with an unknown value of

. Show that we can obtain a

-multiplicative approximation of

with probability

using as few as

samples.